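Tokenization is the process of dividing text into a set of meaningful pieces, called tokens. For example, we can split a block of text into words, or into sentences. Depending on the task at hand, we can define our own criteria for splitting the input text into meaningful tokens. Let's see how to do this.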
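As an illustration of defining our own criteria, here is a minimal sketch that uses only Python's standard `re` module instead of NLTK. The splitting rules are assumptions chosen for illustration (a sentence ends at `.`, `!` or `?` followed by whitespace; a word is a run of word characters with optional internal apostrophes), and `split_sentences` / `split_words` are hypothetical helper names.

```python
import re

def split_sentences(text):
    # Assumed rule: a sentence boundary is '.', '!' or '?' followed by whitespace.
    # The lookbehind keeps the punctuation attached to the sentence it ends.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def split_words(text):
    # Assumed rule: a word is a run of word characters, optionally joined by
    # internal apostrophes (so "Let's" stays whole); punctuation is dropped.
    return re.findall(r"\w+(?:'\w+)*", text)

text = "Are you curious about tokenization? Let's see how it works!"
print(split_sentences(text))
print(split_words(text))
```

Rules like these are easy to write but brittle: `split_sentences("Dr. Smith arrived.")` returns `['Dr.', 'Smith arrived.']`, treating the abbreviation as a sentence end. Cases like this are one reason to prefer NLTK's trained tokenizers.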

How to do it...

- Create a Python file, define some simple sentences to analyze, and run them through several NLTK tokenizers:

```python
# Requires NLTK's Punkt models; download them once with nltk.download('punkt')
# (recent NLTK releases load 'punkt_tab' instead).
from nltk.tokenize import sent_tokenize
from nltk.tokenize import word_tokenize
from nltk.tokenize import WordPunctTokenizer
from nltk.tokenize.punkt import PunktSentenceTokenizer

# Sample text with several sentences and punctuation marks
text = ("Are you curious about tokenization? " +
        "Let's see how it works! " +
        "We need to analyze a couple of " +
        "sentences with punctuations to see it in action.")

# Split the text into sentences
sent_tokenize_list = sent_tokenize(text)
print("Sentence tokenizer:")
print(sent_tokenize_list)

# Split the text into words (contractions such as "Let's" become "Let" + "'s")
print("Word tokenizer:")
print(word_tokenize(text))

# Create an untrained Punkt sentence tokenizer
# (PunktWordTokenizer from older NLTK versions is no longer available)
punkt_sent_tokenizer = PunktSentenceTokenizer()
print("Punkt sentence tokenizer:")
print(punkt_sent_tokenizer.tokenize(text))

# Split on runs of word characters and runs of punctuation
word_punct_tokenizer = WordPunctTokenizer()
print("Word punct tokenizer:")
print(word_punct_tokenizer.tokenize(text))
```

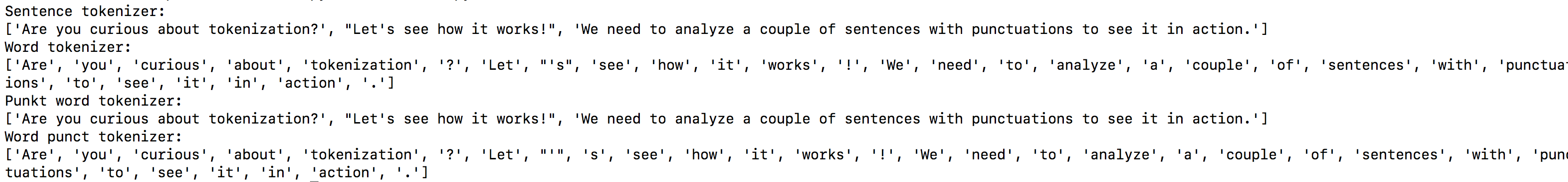
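The two word-level tokenizers in the script treat contractions differently: `word_tokenize` follows Penn Treebank conventions and splits "Let's" into "Let" and "'s", while `WordPunctTokenizer` splits on every run of punctuation. NLTK documents `WordPunctTokenizer` as tokenizing with the regular expression `\w+|[^\w\s]+`. The sketch below reproduces that rule with the standard `re` module so it runs without NLTK; `word_punct_tokenize` is a hypothetical name, and this is an approximation for illustration, not NLTK's own code.

```python
import re

# Pattern NLTK documents for WordPunctTokenizer: a run of word characters,
# or a run of characters that are neither word characters nor whitespace.
WORD_PUNCT_PATTERN = re.compile(r"\w+|[^\w\s]+")

def word_punct_tokenize(text):
    """Approximate WordPunctTokenizer().tokenize(text) using only the stdlib."""
    return WORD_PUNCT_PATTERN.findall(text)

text = ("Are you curious about tokenization? " +
        "Let's see how it works! " +
        "We need to analyze a couple of " +
        "sentences with punctuations to see it in action.")

print(word_punct_tokenize(text))
```

Because the pattern is purely character-based, "Let's" becomes `'Let'`, `"'"`, `'s'`, and a run such as `"?!"` or `"..."` stays together as a single token.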

- The output is as follows: